Artificial Intelligence (AI) and Machine Learning (ML) are certainly not new fields. As early as the 1950s, IBM AI pioneer Arthur Samuel introduced the term “machine learning.” It is only in recent years, however, that AI and ML have seen significant growth. IDC, for one, estimated the AI market at $156.5 billion in 2020, a 12.3 percent increase over 2019. Even amid global economic uncertainty, the market is expected to grow to $300 billion by 2024, a compound annual growth rate (CAGR) of 17.1 percent.

There are challenges to overcome, however, as AI becomes increasingly interwoven into real-world applications and industries. AI has already found meaningful use in behavioral analysis and marketing, for instance, and it is now expanding into many other business processes.

“The role of AI Applications in enterprises is rapidly evolving. It is transforming how your customers buy, your suppliers deliver, and your competitors compete. AI applications continue to be at the forefront of digital transformation (DX) initiatives, driving both innovation and improvement to business operations,” said Ritu Jyoti, program vice president, Artificial Intelligence Research at IDC.

Even with the increasing use of sensors and the Internet of Things (IoT), there is only so much that machines can learn from real-world environments. The limitations are cost and the difficulty of replicating scenarios. This is where synthetic data will play a big part.

Dor Herman

The benefits of synthetic data in training AI

“We need to teach algorithms what it is exactly that we want them to look for, and that’s where ML comes in. Without getting too technical, algorithms need a training process, where they go through incredible amounts of annotated data, data that has been marked with different identifiers. And this is, finally, where synthetic data comes in,” says Dor Herman, Co-Founder and Chief Executive Officer of OneView, a Tel Aviv-based startup that accelerates ML training with the use of synthetic data.

Herman says that real-world data is often either inaccessible or too expensive to use for training AI. Synthetic data, by contrast, can be generated with built-in annotations, which accelerates the training process and makes it more efficient. He cites four distinct advantages of synthetic data over real-world data in ML: cost, scale, customization, and the ability to train AI to make decisions in scenarios that rarely occur in the real world.

“You can create synthetic data for everything, for any use case, which brings us to the most important advantage of synthetic data: its ability to provide training data for even the rarest occurrences that by their nature don’t have real coverage.”

Herman gives examples such as oil spills, weapons launches, infrastructure damage, and other catastrophic or rare events. “Synthetic data can provide the needed data, data that could not have been obtained in the ‘real world,’” he says.

Herman cites a case study in which a client needed AI to detect oil spills. “Remember, algorithms need a massive amount of data in order to ‘learn’ what an oil spill looks like, and the company didn’t have numerous instances of oil spills, nor did it have aerial images of them.”

Because the oil company already used aerial images for ongoing inspection of its pipelines, OneView generated synthetic aerial imagery of spills instead. “We created, from scratch, aerial-like images of oil spills according to their needs, meaning in various weather conditions, from different angles and heights, and with different formations of spills, everything customized to the type of airplanes and cameras used.”
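To see why a handful of variables quickly yields a large training set, consider enumerating every combination of the conditions listed above. The following is a minimal sketch; the axis names and values are hypothetical illustrations, not OneView's actual configuration.

```python
from itertools import product

# Hypothetical variation axes; the values are illustrative, not taken from OneView.
WEATHER = ["clear", "overcast", "rain", "fog"]
SUN_ELEVATION_DEG = [15, 45, 75]   # stand-in for time of day
CAMERA_ANGLE_DEG = [0, 20, 40]     # off-nadir viewing angle
ALTITUDE_M = [300, 600, 1200]
SPILL_SHAPE = ["sheen", "slick", "streaks"]


def render_configs():
    """Yield one render configuration per combination of the variation axes."""
    for weather, sun, angle, alt, shape in product(
        WEATHER, SUN_ELEVATION_DEG, CAMERA_ANGLE_DEG, ALTITUDE_M, SPILL_SHAPE
    ):
        yield {
            "weather": weather,
            "sun_elevation_deg": sun,
            "camera_angle_deg": angle,
            "altitude_m": alt,
            "spill_shape": shape,
        }


configs = list(render_configs())
print(len(configs))  # 4 * 3 * 3 * 3 * 3 = 324
```

Five modest axes already give 324 distinct scenes; randomizing spill position or size within each one multiplies the count further, which illustrates the scale advantage Herman describes.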

Otherwise, this would have been a costly endeavor. “Without synthetic data, they would never be able to put algorithms on the detection mission and would need to continue using people to go over hours and hours of detection flights every day.”

Defining parameters for training

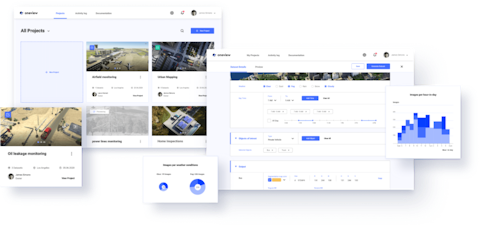

With synthetic data, users can define the parameters of the training data so that the AI makes better decisions when real-world scenarios occur. The OneView platform generates data customized to those parameters. One example is training a computer vision model to detect specific objects in sensor or visual data.

“You input your desired sensor, define the environment and conditions like weather, time of day, shooting angles and so on, add any objects of interest, and our platform generates your data: fully annotated datasets, ready for machine learning model training,” says Herman.
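A specification like the one Herman describes can be thought of as a set of ranges from which each concrete scene is sampled, a technique often called domain randomization. The sketch below is a hypothetical illustration only; `SceneSpec`, its fields, and `sample_scene` are invented names, not the OneView platform's interface.

```python
import random
from dataclasses import dataclass, field


@dataclass
class SceneSpec:
    """Hypothetical user specification: a sensor, an environment, and condition ranges."""
    sensor: str
    environment: str
    weather: list[str]
    hour_range: tuple[int, int]             # inclusive local hours, e.g. (6, 18)
    angle_range_deg: tuple[float, float]    # camera viewing angle
    objects_of_interest: list[str] = field(default_factory=list)


def sample_scene(spec: SceneSpec, rng: random.Random) -> dict:
    """Draw one concrete scene description from the ranges in the spec."""
    return {
        "sensor": spec.sensor,
        "environment": spec.environment,
        "weather": rng.choice(spec.weather),
        "hour": rng.randint(*spec.hour_range),         # inclusive on both ends
        "angle_deg": rng.uniform(*spec.angle_range_deg),
        "objects": list(spec.objects_of_interest),
    }


spec = SceneSpec(
    sensor="aerial_rgb_camera",          # hypothetical sensor name
    environment="desert_pipeline",       # hypothetical environment name
    weather=["clear", "haze", "dust"],
    hour_range=(6, 18),
    angle_range_deg=(0.0, 30.0),
    objects_of_interest=["oil_spill"],
)
rng = random.Random(42)  # fixed seed so the same dataset can be regenerated
scenes = [sample_scene(spec, rng) for _ in range(1000)]
```

Sampling from ranges, rather than enumerating a fixed grid, gives variety at any dataset size, and the fixed seed keeps the generated dataset reproducible.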

Automatic annotation is another advantage over real-world data, which usually has to be annotated by hand, a process that takes considerable time and money. “The swift and automated process that produces hundreds of thousands of images replaces a manual, prolonged, cumbersome and error-prone process that hinders computer vision ML algorithms from racing forward,” he adds.

Attention to detail

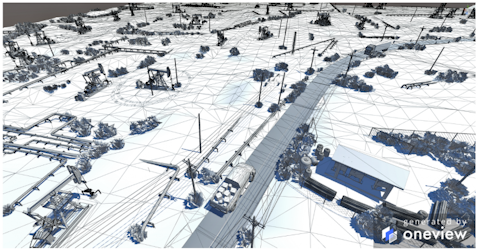

OneView’s synthetic data generation involves a six-layer process in which 3D models are built with game engines and then “flattened” into 2D images.
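“Flattening” a 3D scene into a 2D image is, at its core, a camera projection. Game engines handle this internally, together with lighting and occlusion; the sketch below shows only the basic pinhole-camera projection, assuming an idealized camera looking straight down (nadir) from a given altitude. `NadirCamera` and its parameters are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class NadirCamera:
    """Idealized pinhole camera pointing straight down (nadir view)."""
    altitude_m: float   # height of the camera above the ground plane
    focal_px: float     # focal length expressed in pixels
    width_px: int
    height_px: int

    def project(self, x_m: float, y_m: float, z_m: float = 0.0) -> tuple[float, float]:
        """Project a world point (metres, camera directly above the origin) to pixel (u, v)."""
        depth = self.altitude_m - z_m  # distance along the optical axis
        if depth <= 0:
            raise ValueError("point is at or above the camera")
        u = self.width_px / 2 + self.focal_px * x_m / depth
        v = self.height_px / 2 - self.focal_px * y_m / depth  # image v grows downward
        return u, v

    def ground_sample_distance(self) -> float:
        """Metres of ground covered by one pixel at ground level."""
        return self.altitude_m / self.focal_px


cam = NadirCamera(altitude_m=500.0, focal_px=5000.0, width_px=4000, height_px=3000)
print(cam.project(10.0, 0.0))         # (2100.0, 1500.0)
print(cam.ground_sample_distance())   # 0.1
```

The ground sample distance ties into the sensor step Herman describes next: flying higher or using a shorter focal length makes each pixel cover more ground.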

“We start with the ‘layout of the scene,’ so to speak, where the basic elements of the environment are laid out. The next step is the placement of objects of interest that are the goal of detection, the objects that the algorithms will be trained to discover. We also put in ‘distractors,’ objects that look similar, so the algorithms can learn how to differentiate the ‘goal object’ from similar-looking objects. Then the appearance-building stage follows, when colors, textures, random erosions, noises, and other detailed visual elements are added to mimic how real images look, with all their imperfections,” Herman shares.

“The fourth step involves the application of conditions such as weather and time of day. In the fifth step, sensor parameters (such as the camera lens type) are implemented, meaning we adapt the entire image to look like it was taken by a specific remote sensing system, in resolution and in the other technical attributes unique to each system. Lastly, annotations are added.”
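Part of the fifth step, making an image look as if a specific sensor captured it, is matching that sensor's resolution. One simple way to approximate this, offered here as a hypothetical sketch rather than OneView's method, is to block-average a high-resolution render down to the sensor's coarser ground sample distance (GSD) and add mild random noise. Real sensor models (optical blur, spectral response, compression) are considerably more involved; this covers only resolution and noise, on a grayscale image stored as a list of rows. The function names are invented for the example.

```python
import random


def block_average(img, factor):
    """Downsample a grayscale image (list of rows) by averaging factor x factor blocks."""
    h, w = len(img), len(img[0])
    if h % factor or w % factor:
        raise ValueError("image size must be divisible by factor")
    out = []
    for by in range(0, h, factor):
        row = []
        for bx in range(0, w, factor):
            block = [img[y][x] for y in range(by, by + factor) for x in range(bx, bx + factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def simulate_sensor(img, render_gsd_m, sensor_gsd_m, noise_sigma, rng):
    """Resample a render to a coarser sensor GSD, then add Gaussian noise clipped to [0, 255]."""
    factor = round(sensor_gsd_m / render_gsd_m)
    low = block_average(img, factor)
    return [[min(255.0, max(0.0, v + rng.gauss(0.0, noise_sigma))) for v in row] for row in low]


render = [[(x + y) % 256 for x in range(8)] for y in range(8)]  # 8x8 render at 0.1 m/pixel
print(block_average(render, 4))  # [[3.0, 7.0], [7.0, 11.0]]
sensor_img = simulate_sensor(render, render_gsd_m=0.1, sensor_gsd_m=0.4,
                             noise_sigma=2.0, rng=random.Random(0))
```

Averaging blocks, rather than simply dropping pixels, roughly mimics how a coarser detector integrates light over a larger patch of ground.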

Annotations are the labels that “define” for the algorithm what it is “looking” at: this is a car, this is a truck, this is an airplane, and so on. The resulting synthetic datasets are ready for machine learning model training.
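Because the generator placed every object itself, it can compute each annotation exactly instead of relying on a person to draw it. The sketch below is a hypothetical illustration: it projects an object's ground footprint through a simple nadir pinhole camera and emits a bounding box in the COCO convention `[x, y, width, height]` (top-left corner plus size, in pixels). The function names and category IDs are invented for the example.

```python
def project_nadir(x_m, y_m, altitude_m, focal_px, width_px, height_px):
    """Pinhole projection for a camera looking straight down from altitude_m."""
    u = width_px / 2 + focal_px * x_m / altitude_m
    v = height_px / 2 - focal_px * y_m / altitude_m  # image v grows downward
    return u, v


def footprint_to_coco_bbox(corners_m, cam):
    """Project ground-plane corners and return a COCO-style [x, y, width, height] box.

    The box is clipped to the image; returns None if the object is entirely out of frame.
    """
    pts = [project_nadir(x, y, **cam) for x, y in corners_m]
    us = [u for u, _ in pts]
    vs = [v for _, v in pts]
    x0 = max(0.0, min(us))
    y0 = max(0.0, min(vs))
    x1 = min(float(cam["width_px"]), max(us))
    y1 = min(float(cam["height_px"]), max(vs))
    if x1 <= x0 or y1 <= y0:
        return None  # object lies entirely outside the frame
    return [x0, y0, x1 - x0, y1 - y0]


CATEGORIES = {"car": 1, "truck": 2, "airplane": 3}  # hypothetical category IDs
cam = {"altitude_m": 500.0, "focal_px": 5000.0, "width_px": 4000, "height_px": 3000}

# A truck footprint 10 m long and 3 m wide, centred 20 m east of the point below the camera.
truck = [(15.0, -1.5), (25.0, -1.5), (25.0, 1.5), (15.0, 1.5)]
annotation = {
    "image_id": 1,
    "category_id": CATEGORIES["truck"],
    "bbox": footprint_to_coco_bbox(truck, cam),
}
print(annotation["bbox"])  # [2150.0, 1485.0, 100.0, 30.0]
```

Here the box comes from geometry alone; a production pipeline would likely also need to account for occlusion by other objects, which a renderer can report per pixel.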

The bigger vision: Addressing bottlenecks from real-world imagery

For Herman, the biggest contribution of synthetic data is actually paradoxical: by using synthetic data, AI and its users gain a better understanding of the real world and how it works, through machine learning. Image analytics is held back by a processing bottleneck, and computer vision algorithms cannot scale until that bottleneck is overcome.

“Remote sensing data (imagery captured by satellites, airplanes and drones) provides a unique channel to uncover valuable insights on a very large scale for a wide spectrum of industries. In order to do that, you need computer vision AI as a way to study these vast amounts of data collected and return intelligence,” Herman explains.

“Next, this intelligence is transformed into insights that help us better understand this planet we live on, and of course drive decision making, whether by governments or businesses. The massive growth in computing power enabled the flourishing of AI in recent years, but the collection and preparation of data for computer vision machine learning is the fundamental factor that holds back AI.”

He circles back to how OneView intends to reshape machine learning: “releasing this bottleneck with synthetic data so the full potential of remote sensing imagery analytics can be realized and thus a better understanding of earth emerges.”

Translating synthetic into real-world

The main driver behind Artificial Intelligence and Machine Learning is, of course, business and economic value. Countries, enterprises, businesses, and other stakeholders benefit from the advantages that AI offers, in terms of decision-making, process improvement, and innovation.

“The big message OneView brings is that we enable a better understanding of our planet through the empowerment of computer vision,” concludes Herman. Synthetic data is not “fake” data. Rather, it consists of purpose-built inputs that enable faster, more efficient, more targeted, and more cost-effective machine learning, responsive to the needs of real-world decision-making.